What is black box AI?

Black box AI refers to any artificial intelligence system whose internal operations are not visible to the user or any other interested party. In general, a black box is a mechanism whose inner workings cannot be observed.

Black box AI models reach conclusions or make decisions without explaining how they arrived at them.

As AI technology has advanced, two distinct types of AI systems have emerged: black box AI and explainable AI (also called white box AI). The term black box refers to systems that are opaque to users. Put simply, black box AI systems are those whose internal workings, decision-making processes, and contributing factors are hidden from human users.

This lack of transparency makes it difficult for humans to understand or explain how the system’s underlying model reaches its decisions. Black box AI models can also cause problems with flexibility (updating the model as needs change), bias (incorrect results that may offend or harm certain groups of people), accuracy validation (difficulty validating or trusting the results), and security (unknown flaws that leave the model vulnerable to cyberattacks).

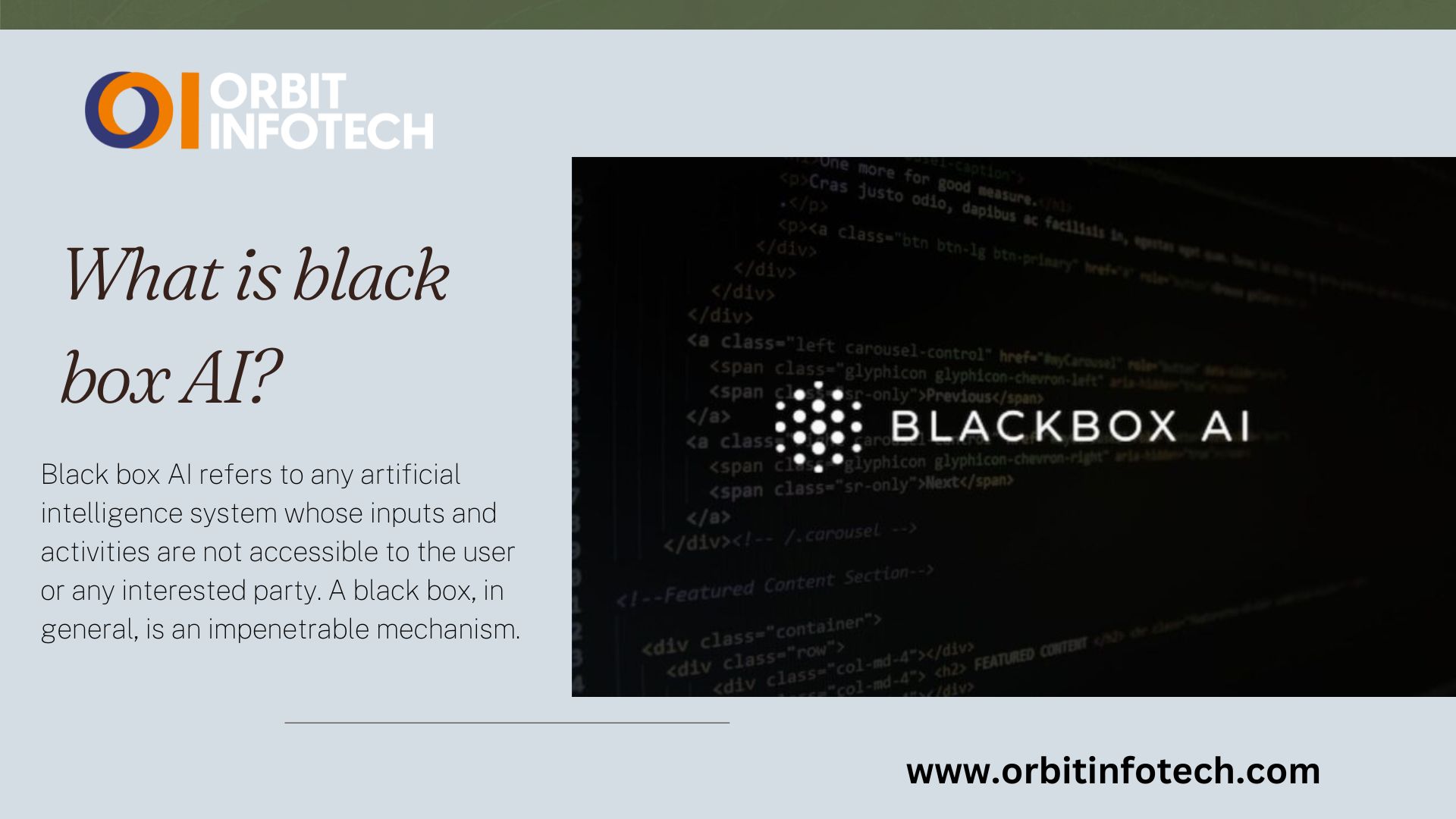

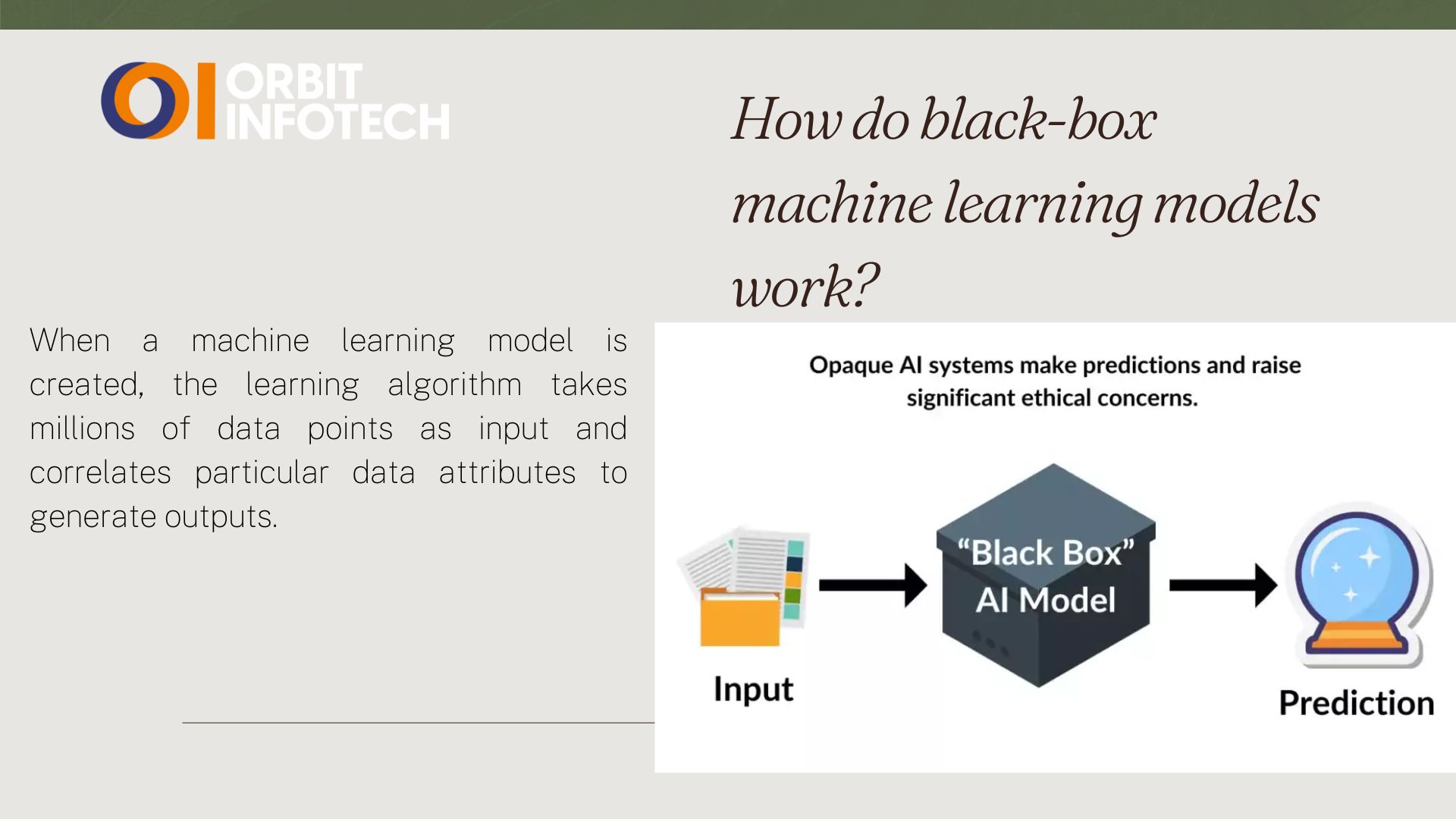

How do black-box machine learning models work?

When a machine learning model is created, the learning algorithm can take millions of data points as input and learn correlations between particular data attributes and the outputs it should generate.

The method typically involves the following steps:

Sophisticated AI systems search large data sets for patterns. To do this, the algorithm processes a vast number of data instances and learns largely on its own through trial and error. As it processes more training data, the model adjusts its internal parameters until it can accurately predict the outcome for new inputs.

Once trained, the model can generate predictions from real-world data. Fraud detection using a risk score is one application of this technology.

As more data is acquired and fed into the model over time, it refines its approach and can produce increasingly better results.
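The train-then-predict loop described above can be sketched with a tiny logistic regression fitted by stochastic gradient descent. This is a minimal illustration under stated assumptions, not how production fraud systems are built: the transactions, the two features, and the names `train` and `risk_score` are all hypothetical. Logistic regression is itself fairly interpretable; it stands in here only to show how a model’s “knowledge” ends up as learned numbers rather than human-written rules. A black box model does the same thing with vastly more parameters.

```python
import math

# Hypothetical toy transactions: (amount in thousands, is_foreign_merchant) -> 1 if fraud
TRAINING_DATA = [
    ((0.1, 0), 0),
    ((0.3, 0), 0),
    ((0.2, 1), 0),
    ((2.5, 1), 1),
    ((3.0, 0), 1),
    ((4.0, 1), 1),
]


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def risk_score(weights, bias, features) -> float:
    """Fraud risk score in [0, 1] for one transaction."""
    z = bias + sum(w * x for w, x in zip(weights, features))
    return sigmoid(z)


def train(data, epochs=2000, learning_rate=0.1):
    """Adjust parameters by stochastic gradient descent on the log loss."""
    weights = [0.0] * len(data[0][0])
    bias = 0.0
    for _ in range(epochs):
        for features, label in data:
            # For log loss, the derivative with respect to z is prediction - label
            error = risk_score(weights, bias, features) - label
            weights = [w - learning_rate * error * x for w, x in zip(weights, features)]
            bias -= learning_rate * error
    return weights, bias


weights, bias = train(TRAINING_DATA)
print("learned parameters:", [round(w, 2) for w in weights], round(bias, 2))
print(f"large foreign purchase: {risk_score(weights, bias, (3.5, 1)):.2f}")
print(f"small domestic purchase: {risk_score(weights, bias, (0.15, 0)):.2f}")
```

Nothing in the learned parameters states a rule such as “large amounts are risky”; that behavior is implicit in the numbers, which is the seed of the opacity discussed next.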

In many circumstances, the inner workings of black box machine learning models are not easily accessible and are largely self-directed. This makes it difficult for data scientists, programmers, and users to grasp how the model makes predictions or to trust the accuracy and authenticity of its outputs.

How do black-box deep learning models work?

Many black box AI models are based on deep learning, a subset of machine learning in which multilayered, or deep, neural networks are used to loosely emulate the human brain and its decision-making abilities. Neural networks are made up of several layers of interconnected nodes known as artificial neurons.

In black box models, these deep networks of artificial neurons spread the processing of an input, and the resulting decision, across tens of thousands of neurons or more. The neurons work together to process the input and detect patterns within it, allowing the AI model to generate predictions and reach specific judgments or responses.
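The layered computation described above can be illustrated with a forward pass through a tiny fully connected network in plain Python. This is a sketch under stated assumptions: the 4-16-16-1 layer sizes are arbitrary, and the weights are random stand-ins for values that training would normally produce. Even at this toy size, the output depends on 369 interacting numbers, none of which corresponds to a human-readable rule.

```python
import math
import random


def relu(z: float) -> float:
    return max(0.0, z)


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def dense_layer(inputs, weights, biases, activation):
    """Each neuron applies its activation to a weighted sum of all inputs plus a bias."""
    return [
        activation(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]


def random_layer(n_in, n_out, rng):
    """Random weights stand in for trained ones in this sketch."""
    weights = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
    biases = [rng.uniform(-0.1, 0.1) for _ in range(n_out)]
    return weights, biases


rng = random.Random(0)  # fixed seed so the sketch is reproducible
# Hypothetical architecture: 4 inputs -> 16 hidden -> 16 hidden -> 1 output
layers = [random_layer(4, 16, rng), random_layer(16, 16, rng), random_layer(16, 1, rng)]


def predict(features):
    activations = features
    for i, (weights, biases) in enumerate(layers):
        # Hidden layers use ReLU; the final layer squashes the output into (0, 1)
        activation = sigmoid if i == len(layers) - 1 else relu
        activations = dense_layer(activations, weights, biases, activation)
    return activations[0]


n_params = sum(len(w) * len(w[0]) + len(b) for w, b in layers)
print("parameters:", n_params)
print(f"prediction: {predict([0.2, 0.7, 0.1, 0.9]):.3f}")
```

Real deep learning models follow the same pattern with millions or billions of parameters, which is why tracing a single prediction back to a “reason” is so hard.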

The resulting decision process can be so complex that, much as with the human brain, it is difficult to determine a deep learning model’s “how”: the particular steps it took to reach its predictions or judgments. For these reasons, deep learning systems are often referred to as “black box AI systems.”

Issues with black box AI

While black box AI models are suitable and beneficial in many situations, they also have a number of drawbacks.

1. AI Bias

AI bias can be introduced into machine learning algorithms or deep learning neural networks through deliberate or unconscious prejudice on the part of developers. Bias can also creep in through unnoticed errors, or through training data whose specifics are overlooked. Typically, the outputs of a biased AI system will be distorted or plainly wrong, potentially in a way that is insulting, unjust, or even deadly to particular people or groups.

Example

An AI system for IT recruitment may use historical data to help HR staff select candidates for interviews. However, because most IT staff have historically been male, the system may learn from this data to recommend only male candidates, even if the candidate pool contains skilled women. Simply put, it shows a predisposition toward male applicants and discriminates against female applicants. Similar concerns may arise for other groups, such as candidates from specific ethnic groups, religious minorities, or immigrant populations.

An image recognition system that labels a person cooking as a woman even when the image shows a man is another example of gender bias in machine learning.
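Skew like that in the recruiting example can sometimes be detected from the outside, without seeing inside the model, by comparing its selection rates across groups. The sketch below computes each group’s selection rate and the ratio of the lowest rate to the highest, flagging ratios below 0.8, a rule of thumb known as the “four-fifths rule” in US employment guidance. The screening outcomes are invented for illustration, and the helper names are hypothetical. Such a check only reveals that a disparity exists, not where in the model it comes from.

```python
from collections import defaultdict


def selection_rates(decisions):
    """decisions: iterable of (group, was_selected) pairs -> {group: selection rate}."""
    selected = defaultdict(int)
    total = defaultdict(int)
    for group, was_selected in decisions:
        total[group] += 1
        selected[group] += int(was_selected)
    return {group: selected[group] / total[group] for group in total}


def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest."""
    return min(rates.values()) / max(rates.values())


# Hypothetical interview recommendations produced by an opaque recruiting model
decisions = (
    [("male", True)] * 30 + [("male", False)] * 70
    + [("female", True)] * 12 + [("female", False)] * 88
)

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates)
print(f"impact ratio = {ratio:.2f}")
if ratio < 0.8:  # "four-fifths" rule of thumb
    print("Possible adverse impact: audit the model and its training data")
```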

With black box AI, it is difficult to determine where the bias is coming from or whether the system’s models are unbiased. If the system’s intrinsic bias repeatedly produces distorted findings, the organization’s reputation may suffer, and it could face legal action for discrimination. Bias in black box AI systems can also have a societal cost, including marginalization, harassment, unjust imprisonment, and even injury or death of specific groups of people.

To avoid such negative repercussions, AI engineers must build transparency into their algorithms. It is also critical that they follow AI regulations, accept responsibility for mistakes, and commit to responsible AI development and use.

In some circumstances, techniques such as sensitivity analysis and feature visualization can provide insight into how the AI model’s internal processes operate. Even so, much of the model’s inner workings remains opaque.
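One-at-a-time sensitivity analysis is among the simplest of these techniques: treat the model as a function you can call but not read, nudge one input at a time, and measure how much the output moves. In this sketch `opaque_model` is a hypothetical stand-in whose formula we pretend not to know, and the feature names and the `delta` step size are arbitrary. The result is a local finite-difference estimate around one baseline input, so it describes the model’s behavior near that point rather than explaining it globally.

```python
from typing import Callable, Sequence


def sensitivity(model: Callable[[Sequence[float]], float],
                baseline: Sequence[float],
                delta: float = 0.1) -> list[float]:
    """Estimated output change per unit change of each input, one input at a time."""
    base_output = model(baseline)
    effects = []
    for i in range(len(baseline)):
        nudged = list(baseline)
        nudged[i] += delta
        effects.append((model(nudged) - base_output) / delta)
    return effects


def opaque_model(x: Sequence[float]) -> float:
    # Hypothetical stand-in: in practice we could only call it, not read it
    return 3.0 * x[0] - 0.5 * x[1] + x[0] * x[2]


effects = sensitivity(opaque_model, [1.0, 2.0, 0.0])
for name, effect in zip(["feature_a", "feature_b", "feature_c"], effects):
    print(f"{name}: {effect:+.2f}")
```

Note that `feature_c` appears to matter only because `feature_a` is nonzero at this baseline; interactions like this are exactly what a single local probe can misrepresent.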

2. Lack of transparency and accountability

Even when black box AI models deliver accurate results, their complexity can make it difficult for engineers to fully comprehend and verify them. Some AI scientists, including those who contributed to some of the most significant achievements in the field, are unsure exactly how these models function. This lack of understanding reduces transparency and undermines accountability.

These challenges are particularly acute in high-risk industries such as healthcare, finance, the military, and criminal justice. Because the decisions made by these models are hard to verify, they cannot be fully trusted, and the consequences for people’s lives can be far-reaching and not always positive. It can also be difficult to hold anyone accountable for an algorithm’s decisions when it relies on opaque models.

3. Lack of flexibility

Another major issue with black box AI is a lack of adaptability. If the model needs to be altered for a different use case, such as describing a different but physically similar object, working out the new rules or the bulk parameter changes needed for the update can be time-consuming.

4. Difficulty validating results

Black box AI delivers outcomes that are difficult to confirm and reproduce. How did the model reach this particular result? Why this result and not another? How can we know whether it is the best or most accurate answer? It is nearly impossible to answer these questions, and therefore to rely on the resulting data to support human actions or decisions. This is one reason why processing sensitive data with a black box AI model is not recommended.

5. Security flaws

Black box AI models frequently contain weaknesses that threat actors can exploit by manipulating input data. For example, attackers could alter the data to influence the model’s conclusion, resulting in erroneous or even dangerous decisions. Because the model’s decision-making process is so difficult to reverse engineer, it is hard to detect such manipulation or to prevent the model from making poor choices.
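This kind of input manipulation can be illustrated with the idea behind the fast gradient sign method (FGSM): shift every feature by a small amount in whichever direction increases the model’s score. For a linear model that direction is simply the sign of each weight. In this sketch the weights, bias, input, and the “approve”/“reject” labels are hypothetical; attacks on deep networks derive the same signs from the model’s gradient instead, but the effect is similar. Here a change of at most 0.1 per feature is enough to flip the decision.

```python
def decision(weights, bias, x):
    """Hypothetical linear classifier: approve when the score is positive."""
    score = bias + sum(w * xi for w, xi in zip(weights, x))
    return ("approve" if score > 0 else "reject"), score


def sign(v: float) -> int:
    return (v > 0) - (v < 0)


def adversarial_nudge(weights, x, epsilon):
    """Move every feature by epsilon in the direction that raises the score."""
    return [xi + epsilon * sign(w) for w, xi in zip(weights, x)]


# Hypothetical model parameters and input
weights = [0.8, -1.2, 0.5, 0.9]
bias = -0.3
x = [0.2, 0.4, 0.1, 0.3]

x_adv = adversarial_nudge(weights, x, epsilon=0.1)
print("original:", decision(weights, bias, x))
print("nudged:  ", decision(weights, bias, x_adv))
print("largest per-feature change:", max(abs(a - b) for a, b in zip(x_adv, x)))
```

Because each feature barely moves, the altered input can look legitimate to a human reviewer, which is what makes such manipulation hard to spot in an opaque system.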

It is also difficult to uncover other security flaws affecting the AI model. One common blind spot arises from third parties that have access to the model’s training data. If these parties fail to follow good security practices to protect the data, it will be difficult to keep it out of the hands of cybercriminals, who may gain unauthorized access to modify the model and falsify its conclusions.

When should black-box AI be used?

Although black box AI models pose numerous challenges, they also offer the following benefits:

- Increased accuracy. Complex black box systems may achieve higher prediction accuracy than more interpretable systems, particularly in computer vision and natural language processing, because they can detect subtle patterns in data that people cannot see. However, the same complexity that makes these algorithms accurate also makes them less transparent.

- Rapid conclusions. Black box models are frequently built on a predetermined set of rules and equations, making them quick to run and simple to optimize. For example, computing the area under a curve with a least-squares fit may yield the correct result even if the model lacks complete knowledge of the underlying process.

- Minimal computational power. Many black box models are relatively simple, so they do not require a lot of computing resources.

- Automation. Black box AI models can automate complex decision-making processes, reducing the need for human involvement. This saves time and resources and makes previously manual procedures more efficient.

In general, the black box AI approach is used in deep neural networks, where the model is trained on vast quantities of data and the algorithm’s internal weights and parameters are adjusted during training. Such models are useful in applications that need accurate and fast data classification or identification, such as image and speech recognition.

Black box AI versus white box AI

Black box AI and white box AI are two distinct approaches to building AI systems. The approach chosen depends on the specific uses and aims of the final system. White box AI is often referred to as explainable AI, or XAI.

The level of explainability distinguishes white box and black box models.

XAI is designed so that a typical person can understand its logic and decision-making process. Because human users can follow how the AI model works and how it arrives at specific answers, they also have grounds to trust its results. In this respect, XAI is the opposite of black box AI.

A black box AI system’s inputs and outputs are known, but its internal workings are opaque and difficult to understand. White box AI, by contrast, is open about how it reaches its conclusions. Its results are interpretable and explainable, allowing data scientists to examine a white box algorithm and understand how it behaves and which variables influence its decisions.

Because the core workings of a white box system are visible and easily understood, this approach is frequently used in decision-making applications such as medical diagnosis or financial analysis, where it is critical to understand how the AI arrived at its conclusions.

Explainable, or white box, AI is often preferred for several reasons.

First, it allows the model’s developers, engineers, and data scientists to audit the model and confirm that the AI system is working as intended. If it is not, they can identify the changes required to improve the system’s output.

Second, an explainable AI system enables individuals affected by its outputs or decisions to contest the results, particularly if an outcome stems from bias inherent in the AI model.

Third, explainability makes it easier to ensure that the system complies with legal requirements, many of which have evolved in recent years to mitigate the negative effects of AI (the EU’s AI Act is a well-known example). These effects include risks to data privacy, AI hallucinations that produce inaccurate output, data breaches affecting governments or organizations, and the proliferation of audio and video deepfakes that spread misinformation.

Here are a few of the many AI-related regulations that are now in effect or being proposed.

Finally, explainability is critical for the implementation of responsible AI. Responsible AI refers to AI systems that are safe, transparent, accountable, and employed in an ethical manner to deliver trustworthy, dependable results. The purpose of responsible AI is to deliver helpful outcomes while minimizing harm.

Here’s an overview of the distinctions between black box AI and white box AI:

- Black box AI is often more accurate and efficient than white box AI, particularly on complex tasks.

- White box AI is more easily understood than black box AI.

- Examples of black box models include boosting and random forest models, which are highly nonlinear and difficult to explain.

- White box AI is easier to debug and troubleshoot because it is transparent and interpretable.

- Examples of white box AI models include linear models, decision trees, and regression trees.
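To illustrate why white box models are easier to debug and explain, here is a small decision tree that returns the rules it applied alongside its prediction. The tree structure, the feature names (`debt_to_income`, `credit_history_years`), the thresholds, and the labels are hypothetical and hand-built rather than learned; a tree trained from data would be read the same way, one threshold test per level.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    feature: Optional[str] = None   # feature tested at this node (None for a leaf)
    threshold: float = 0.0
    left: Optional["Node"] = None   # branch taken when value <= threshold
    right: Optional["Node"] = None  # branch taken when value > threshold
    label: Optional[str] = None     # prediction stored at a leaf


def predict_with_explanation(node: Node, sample: dict) -> tuple[str, list[str]]:
    """Walk the tree and record every rule applied on the way to a leaf."""
    path = []
    while node.label is None:
        value = sample[node.feature]
        if value <= node.threshold:
            path.append(f"{node.feature} = {value} <= {node.threshold}")
            node = node.left
        else:
            path.append(f"{node.feature} = {value} > {node.threshold}")
            node = node.right
    return node.label, path


# Hypothetical hand-built tree for a loan decision
tree = Node(
    feature="debt_to_income", threshold=0.4,
    left=Node(feature="credit_history_years", threshold=2,
              left=Node(label="review"), right=Node(label="approve")),
    right=Node(label="decline"),
)

label, path = predict_with_explanation(tree, {"debt_to_income": 0.25, "credit_history_years": 5})
print(label)
for step in path:
    print("  because", step)
```

Every prediction comes with the exact chain of tests that produced it, so an auditor can check each rule directly, something the earlier neural network sketch cannot offer.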

More on responsible AI

Responsible AI (RAI) refers to AI that is ethically and socially responsible in its development and application. RAI is about holding the AI algorithm accountable before it produces outcomes. RAI’s guiding principles and best practices are intended to mitigate the negative financial, reputational, and ethical risks that black box AI can pose. In doing so, RAI can benefit both AI makers and AI users.

AI practices are considered responsible if they adhere to the following principles:

- Fairness. The AI system is fair to all people and demographic groups, and it does not perpetuate or exacerbate existing biases or discrimination.

- Transparency. The system is simple to understand and explain to both its users and those it will impact. Furthermore, AI developers must explain how data used to train an AI system is gathered, stored, and used.

- Accountability. The companies and individuals that develop and use AI should be held accountable for the AI system’s judgments and conclusions.

- Ongoing development. Continuous monitoring is required to verify that outputs remain aligned with ethical AI principles and social standards.

- Human oversight. Every AI system should be constructed so that humans may monitor and intervene as necessary.

When AI is both explainable and responsible, it is more likely to have a positive impact on humans.
